The AI governance gambit: Scale your AI without making headlines

AI’s biggest challenge isn’t building powerful models — it’s governing them responsibly without stifling innovation. This article identifies five gaps that can hold organizations back from scaling AI and provides learnings with real-world examples to move from experimentation to production — helping to avoid costly mistakes.

Disclaimer: The examples provided in this article, including any people and organizations named, are hypothetical industry use cases and are not based on any specific Microsoft customer or real-world deployment. They are intended solely to illustrate common challenges and considerations in AI governance.

Julia Steen felt a knot tighten in her stomach as she scrolled through the company’s social media feed.

For the past six months, the respected product leader and engineer had spearheaded the development of an experimental AI-driven health assistant aimed at revolutionizing early cancer detection. Now, her project was at the center of a growing public backlash. She recalled the final release readiness meeting, when Janice, the Responsible AI lead, had expressed her concerns: “The app isn’t ready. We need to test on more diverse datasets. Two pilot customers are not enough to ensure clinical reliability.”

Julia had acknowledged the concerns, but the pressure to launch was overwhelming. Preliminary results were promising, and delaying the release seemed to mean losing market advantage. Now, with public trust eroding, management upset, and her team disheartened, she wondered, “Was it worth it?” and, more importantly, “Could it have been avoided?”

Julia’s situation may not be unique. As product teams move from conducting experiments to scaling their AI systems, similar stories are emerging across industries and organizations of all sizes. Gartner predicts that at least 30 percent of generative AI projects will be abandoned after proof of concept by the end of 2025. Additionally, a CIO survey of more than 1,000 senior executives at large enterprises revealed that 54 percent incurred losses due to failures in governing AI or ML applications, with 63 percent reporting losses of $50 million or more.

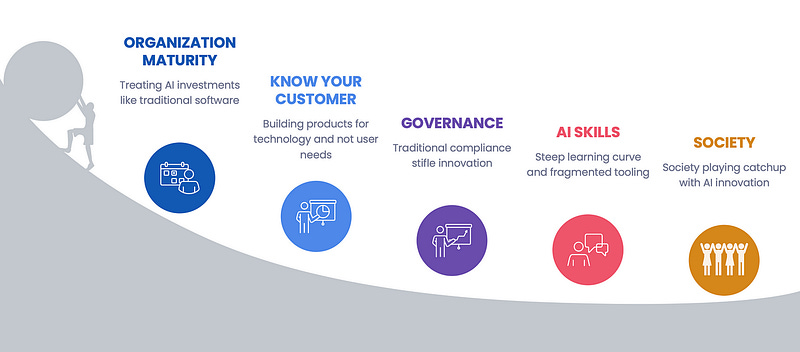

Mind the gap: Why does your AI struggle in the real world?

OpenAI’s ChatGPT, with its 300 million weekly users, makes AI success look effortless. But for most companies, the journey to scale AI is far more complex — especially as AI features are considered for business-critical products and processes. Teams deal with multiple challenges when transitioning AI systems from experimental phases to full-scale production. These challenges can be traced to several systemic gaps, including the following.

The organizational maturity gap

Successfully implementing AI requires more than just technological upgrades — it demands a fundamental shift in mindset. Organizations must align AI initiatives with business strategy, invest in talent, and, in some cases, rethink team structures to support efficient decision making. These are long-term investments, not short-term projects that can be defined with a simple “I want an AI chatbot” mission.

However, many organizations treat AI like a traditional software cycle instead of embracing AI’s need for continuous learning and adaptation. AI systems are probabilistic, data-dependent, and highly sensitive to edge cases, making them fundamentally different from traditional software. Without robust infrastructure, high-quality data, and real-world testing, AI systems can fail unpredictably at any stage. Consider a hypothetical logistics company developing an AI-powered truck route optimization system. It may perform well in controlled conditions, but in real-world deployment, it could misinterpret constraints and route trucks through residential areas, triggering public complaints and fines. Such failures must be watched for throughout the system lifecycle; inadequate evaluation and a lack of continuous monitoring can leave teams unable to anticipate and mitigate emergent risks.

AI requires a mindset shift — from “code, test, ship” to “experiment, evaluate, adapt.”

The know-your-consumer gap

AI products must be built around user needs, not just technology. AI’s unpredictability means that teams can’t control every user scenario, making it vital to deeply understand user needs. Yet many AI applications default to interfaces such as chatbots, often complicating experiences instead of simplifying them. A 2022 Zendesk report found that 60 percent of users are disappointed with chatbots, citing inefficiency and the inability to choose between human or AI support. Consider a hypothetical healthcare provider that implements an AI chatbot for appointment scheduling. Despite using natural language and Large Language Model (LLM) technologies, many users find it inefficient, requiring multiple steps for tasks that a simple online form or a call to the office previously handled more quickly. Ultimately, AI systems succeed not by showcasing technology, but by meeting real user needs.

The governance gap

Governance is supposed to provide guardrails, but for product teams, it often feels like handcuffs — or worse, blinders. Traditional compliance prioritizes static policies like data privacy and access controls, yet they may fail to address the unpredictable and evolving nature of generative and agentic AI systems. This gap creates a dangerous paradox: Rules that either stifle innovation or allow unchecked risks to slip through.

The result? AI systems that spiral into chaos if governance fails to keep pace.

Imagine a financial institution that deploys an AI-powered chatbot to assist with customer service. Governance policies ensure data privacy compliance but might overlook or even prohibit real-time output monitoring and responsible AI oversight. The chatbot could then offer misleading financial advice, breaching lending regulations. Fixing the fallout may require multi-stage approvals, delaying updates, frustrating customers, and exposing the organization to reputational risk. Governance that isn’t adaptive leaves organizations vulnerable to costly failures, regulatory scrutiny, and eroded trust. In short, it’s no longer about what AI does; it’s about how we manage what it becomes.

As AI agents evolve, governance complexity increases exponentially. Unlike traditional models, AI agents may reason, plan, and act autonomously, often across multiple steps without human oversight. This raises risks such as unpredictable decision chains and dynamic adaptation, making static policies ineffective. Organizations need real-time monitoring to track AI system behaviors, detect anomalies, and intervene when actions deviate from expected norms.
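The real-time monitoring described above can be sketched in a few lines. This is a minimal illustration, not a production guardrail: the `AgentAction` and `ActionMonitor` names, the allowed tool list, and the step limit are all hypothetical values chosen for the example. The monitor reviews each action an agent proposes and escalates to a human when the action falls outside expected norms.

```python
from dataclasses import dataclass, field

# Hypothetical policy values, chosen only for illustration.
ALLOWED_TOOLS = {"search_kb", "lookup_account", "draft_reply"}
MAX_STEPS_WITHOUT_REVIEW = 5


@dataclass
class AgentAction:
    step: int        # position of the action in the agent's decision chain
    tool: str        # name of the tool the agent wants to call
    argument: str    # input the agent wants to pass to the tool


@dataclass
class ActionMonitor:
    """Checks each proposed agent action against simple runtime rules."""
    allowed_tools: set = field(default_factory=lambda: set(ALLOWED_TOOLS))
    max_steps: int = MAX_STEPS_WITHOUT_REVIEW
    log: list = field(default_factory=list)

    def review(self, action: AgentAction) -> str:
        """Return 'allow', or 'escalate' when a human should step in."""
        if action.tool not in self.allowed_tools:
            verdict = "escalate"  # tool is outside the approved set
        elif action.step > self.max_steps:
            verdict = "escalate"  # decision chain is longer than expected
        else:
            verdict = "allow"
        # Keep an audit trail of every decision for later analysis.
        self.log.append((action.step, action.tool, verdict))
        return verdict


monitor = ActionMonitor()
verdicts = [
    monitor.review(AgentAction(1, "search_kb", "mortgage rates")),
    monitor.review(AgentAction(2, "approve_loan", "customer 123")),
    monitor.review(AgentAction(6, "draft_reply", "summary")),
]
```

In this sketch the second action is escalated because the tool is not approved, and the third because the chain has run longer than the assumed limit. A real deployment would likely add content checks and anomaly detection on top of rules like these.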

The AI skills gap

Steep learning curves and fragmented tools leave product teams stuck while managing stakeholder expectations. The pace of innovation in AI has introduced new paradigms like prompt engineering, fine-tuning, and Retrieval Augmented Generation (RAG), skills that require a deep understanding of user needs, data patterns, and model behaviors. The ecosystem is also fragmented: tools like LangChain and Semantic Kernel, as well as integrated platforms like Azure AI Foundry and Google Vertex AI, offer potential but may take time to drive standardization or integrate seamlessly into existing engineering workflows. With no consistent best practices, teams are left playing catch-up with technology rather than focusing on delivering meaningful business outcomes. Hiring specialized skills is another hurdle. Building a team with expertise in AI takes time; data scientists and user design teams often operate at full capacity, and organizations can only shuffle internal resources so much before hitting their limits.

The society gap

AI holds immense potential, offering transformative possibilities across industries. Recognizing this, governments are working toward more forward-looking regulatory approaches to keep pace with rapidly evolving technology. Frameworks like the European Union AI Act and California’s AI Accountability Act aim to provide structure while ensuring regulations can adapt alongside innovation. As with HIPAA and GDPR, their effectiveness will be shaped by real-world application. For instance, the EU AI Act’s risk-based approach introduces categories like “high-risk” and “general-purpose” AI, but as models evolve (such as OpenAI’s o3 reasoning models, which enhance multi-step reasoning while becoming more efficient), these definitions may need continuous refinement. Research by Reuel & Undheim (2024) highlights the importance of governance frameworks that evolve alongside AI’s rapid advancements.

So, how do we navigate these complexities?

Rather than adding layers of static frameworks and assessments that may burden teams, organizations need an approach that is iterative, data-driven, and adaptive to the evolving needs of the organization: a strategic gambit that recalibrates the “game board” for every move.

The AI governance gambit: Playing the long game

In chess, a gambit is a calculated move: Sacrifice a pawn to secure a stronger position. In AI governance, it’s about making deliberate, strategic choices — prioritizing the most impactful measures to smoothly transition from experimentation to production while ensuring business success and control. Let’s discuss some of these.

Opening moves

These initial, high-priority moves establish your governance foundation. If you’re beginning your AI journey, this is where you want to focus first. If you are already on this journey, review these moves to identify current gaps that may hinder your ability to scale. It might look daunting, but you must realistically assess the maturity of your team and understand market needs to make the most of AI for your use cases. You may decide to leverage the expertise of a consulting or strategy firm for an outside perspective.

Here are a few key questions to consider as a starting point:

How are our AI initiatives prioritized based on their potential impact on our company’s mission?

What measurable business value will be realized from our AI enablement long term to position us for a competitive advantage?

How do our projected costs of AI experimentation compare to the expected business value, and what milestones will validate further investment?

What is the opportunity cost of not adopting AI in the proposed areas?

Can our current infrastructure support initial AI experiments, or do we need to leverage a quick-start solution (e.g., Azure Cloud for free)?

Do we have access to the necessary data to start, and is it compliant with regulations?

What responsible AI considerations or potential risks could arise from our AI system, and how can we address them early in the development process?

Do we have the minimum set of skills or partners to start experimentation, and what gaps do we anticipate?

These questions provide the initial clarity needed to determine whether the AI investment holds genuine business value or is merely a technology experiment — and what’s required to set it up for success.

Hidden traps on the board: Avoid falling into these during the initial stage

Starting without clear business goals: AI is not the business goal. Instead, have a clear problem statement and business outcome. Don’t use AI for the sake of using AI.

Overstaffing the team prematurely: Avoid building large teams before understanding the scope and requirements of the project.

Choosing overly complex use cases: Selecting high-risk or customer-facing projects initially can overwhelm teams and increase failure risk.

Overlooking stakeholder alignment: Proceeding without securing buy-in from leadership or cross-functional partners (security, compliance, architecture boards) can derail progress as you start development.

Not assessing organizational maturity: Investing in AI systems without first assessing and refining the organization’s current infrastructure, engineering, and data capabilities can lead to unexpected delays and poor-quality output.

Opening moves as described above can allow your organization to ensure that investments are aligned to business outcomes, that infrastructure is ready, that compliance, security, and responsible AI expectations are aligned from the beginning, and that skill gaps are identified. This foundation allows the start of confident experimentation, with vetted essentials to minimize risks and maximize impact.

Mid-game strategies

These moves focus on running diverse types of experiments to validate hypotheses, test infrastructure, and assess data readiness. They are cyclic by design: Each experiment generates learnings that inform subsequent iterations, coming closer to business validation and alignment to defined performance metrics. Unlike traditional software projects that progress from development to production, AI systems require an extended period of iterative experimentation and consistent evaluation. This phase is critical for building confidence and gathering insights necessary to scale effectively. The upshot here is that in AI, you are always experimenting.

You need to change your mindset from delivering code at milestones to graduating experiments to production.

Expect to stay in this phase while refining both technical and organizational readiness.

The outcomes from this stage provide answers to some of the following questions:

What are we learning about user behavior and needs from the feedback gathered during our experiments?

What do our experiment metrics (e.g., accuracy, efficiency, adoption rates) reveal about our system’s performance in real-world scenarios?

What patterns are emerging from experiment failures, and how can these inform our future iterations?

What lessons are we learning through observability about the scalability and adaptability of our AI solution across different use cases?

What social factors, societal biases, or unintended consequences are emerging during experimentation, and what do they reveal about gaps in our data, model design, or deployment strategy?

What infrastructure challenges have emerged during experimentation, and how do they shape our scalability plans?

What lessons are we learning about integrating AI systems with our existing business processes?

What gaps in skills or expertise have our experiments revealed within our teams?

Without clearly defined success metrics, teams risk running endless experiments with no clear path to production. The key to a strong mid-game is not just running experiments — it’s knowing when to graduate them to production.
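One way to make "knowing when to graduate" concrete is to write the success metrics down as explicit thresholds and check every experiment against them. The sketch below is illustrative only: the metric names, the threshold values, and the `graduation_decision` function are assumptions made for this example, not a prescribed standard.

```python
# Hypothetical success criteria: metric name -> (comparison, threshold).
CRITERIA = {
    "task_accuracy": (">=", 0.90),
    "p95_latency_seconds": ("<=", 2.0),
    "pilot_adoption_rate": (">=", 0.30),
}


def graduation_decision(results: dict, criteria: dict = CRITERIA) -> tuple:
    """Return (decision, unmet), where unmet lists the criteria not satisfied."""
    unmet = []
    for name, (op, threshold) in criteria.items():
        value = results.get(name)
        if value is None:
            unmet.append(name)  # a metric that was never measured counts as unmet
            continue
        passed = value >= threshold if op == ">=" else value <= threshold
        if not passed:
            unmet.append(name)
    decision = "graduate" if not unmet else "iterate"
    return decision, unmet


# Example experiment results (made-up numbers).
decision, unmet = graduation_decision(
    {"task_accuracy": 0.93, "p95_latency_seconds": 2.6, "pilot_adoption_rate": 0.41}
)
```

Here the experiment stays in iteration because latency misses its assumed target, and the list of unmet criteria tells the team where the next experiment should focus.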

Hidden traps on the board: Avoid falling into these in the mid-game stage

Running experiments without goals: Tests to “play with AI” may lead to wasted time and unclear outcomes. Every experiment should validate a clear hypothesis tied to business goals.

Neglecting feedback loops: Failing to involve domain experts or gather real user feedback results in experiments that don’t reflect real-world needs. Offline testing on a grounded data set is a good start but you need a combination of online-offline testing to ensure diverse coverage.

Scaling before learning: Jumping to scale up (more people, more resources) without proper evaluation testing for business outcomes or system performance will lead to wasted investments.

Delaying responsible AI considerations: Neglecting a holistic responsible AI approach can embed social and societal biases or unintended consequences into the system.

Compromising data integrity: Using low-quality or unethical datasets jeopardizes model performance and trustworthiness. Always vet sources for accuracy and compliance.

At this point you may be asking: Wait, so all my investment is going into experimenting?

It’s a fair concern. But experimentation is the cheapest way to validate ideas before making costly commitments. Mature AI teams don’t merely experiment; they run structured experiments that systematically de-risk AI adoption and ensure business alignment. Whether it’s using UX research to validate user behavior or evaluation with models for domain-specific feedback, organizations that treat AI experimentation as a learning engine can outperform those that jump blindly into production. The goal isn’t endless testing. It’s either failing fast to save costs or graduating to production to generate value.

This is also a culture move, because as experimentation matures, organizations organically build test beds, model benchmarks, data pipelines, simulation environments, and model playgrounds to accelerate iteration that works on their own data. Cross-functional teams become the norm: design, product, engineering, and domain experts ensure experiments are measured not just for feasibility but for business impact. The recommendation here is to start small on investments and keep scaling based on results.

End game mastery

As organizations make their end game moves, the focus shifts from experimenting to operationalizing — driving revenue, efficiency, and resilience. AI is no longer just an experiment; it’s now a core part of the business, adapting and improving with real-world use.

At this stage, organizations must track how AI creates business value and how effectively issues are identified and fixed to keep systems reliable and trustworthy. Evaluation testing, feedback loops and design partner programs ensure that systems stay relevant by learning from real users, while system telemetry and usage insights drive product improvements — creating a cycle of continuous learning and long-term value.

Rigorous, iterative evaluation is the foundation of trust in AI — ensuring your system performs reliably not just before deployment, but continuously as it scales in production.

The focus is on growth and constant adaptability to change:

What feedback loops and customer onboarding programs are in place to capture and act on user insights post-deployment?

How are we evaluating AI performance, and do our testing frameworks validate business value using real-time feedback?

How effectively are our teams trained to evaluate, optimize, and troubleshoot AI systems in production?

How well is our infrastructure designed to scale and adapt to future advancements, regulatory changes, and evolving user needs?

How are we leveraging AI observability practices to proactively mitigate risks and make data-informed product decisions?

What structured processes do we have in place to detect, analyze, and respond to unexpected AI outcomes or edge cases in real-world scenarios?

What systems do we have in place to monitor compliance and responsible AI practices for our deployed systems?

How effectively are we using red teaming and secure design practices to enforce AI guardrails, protect data privacy, and mitigate emerging threats?

Hidden traps on the board: Avoid falling into these in the end-game stage

Treating AI as a one-time deployment instead of as a living system: AI is still an evolving space. Foundation models are maturing and tooling is evolving, so your AI systems must continuously evolve as well. Without iterative updates and continuous evaluation testing, AI systems become obsolete, unreliable, or even harmful.

Neglecting customer feedback loops: AI systems must evolve with real-world use. Without structured feedback, errors compound until they explode into full-scale failures — damaging user trust and regulatory standing.

Not measuring business impact and value post-deployment: AI success isn’t just about model accuracy or reduced latency; it’s about real-world results. If AI models improve efficiency by 20 percent but don’t translate into revenue growth or cost savings, the initiative risks being deprioritized.

Ignoring AI infrastructure investments: Without scalable test environments, evaluation loops, and data deployment pipelines, AI models risk stagnation and failure in real-world applications. A lack of automated testing and drift monitoring can lead to silent model degradation, reducing accuracy over time. GPU and compute constraints can prevent custom deployed models from scaling, while missing observability workflows slow iteration, delaying fixes and improvements. These gaps can result in higher operational costs, unreliable AI performance, and failure to meet business expectations.

Insufficient red teaming and responsible AI evaluations: AI can introduce bias, security risks, and adversarial vulnerabilities if not stress-tested before and after deployment. Additionally, testing the system against company compliance policies and government regulations is critical before deployment.
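The drift monitoring mentioned among the traps above can be illustrated with a small, dependency-free check. The sketch below uses the Population Stability Index (PSI), one common way to compare a baseline distribution of a model input or score against recent production data. It is an example technique chosen for illustration, not a method named in this article; the bin count, the sample data, and the 0.25 alert threshold (a widely used rule of thumb) are assumptions.

```python
import math


def psi(expected: list, actual: list, bins: int = 10) -> float:
    """Population Stability Index between a baseline and a recent sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def fractions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp values outside the baseline range
            counts[idx] += 1
        # A small floor keeps the logarithm defined for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = fractions(expected), fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Made-up data: the baseline is spread evenly, recent traffic has shifted upward.
baseline = [i / 100 for i in range(100)]
recent = [0.5 + i / 200 for i in range(100)]

drift_score = psi(baseline, recent)
needs_review = drift_score > 0.25  # assumed alert threshold
```

Run on a schedule against production inputs, a check like this can surface silent degradation early enough to trigger re-evaluation before users notice.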

Going back to our example, what if Julia’s team had structured their AI governance differently? With end-game strategies in place, they could have caught blind spots before they made headlines. Robust governance and real-time monitoring through services like Azure AI Content Safety could have helped flag misleading medical advice before it reached patients. Feedback loops and design partner programs could have surfaced gaps in AI responses, ensuring the system learned from real-world use. By continuously tracking AI performance, adapting models based on real patient interactions, and setting up rapid response workflows, her team could have avoided a costly lawsuit and preserved trust in their AI-driven healthcare assistant.

In chess, mastery isn’t about predicting every move — it’s about having a strategy to stay in control. AI governance is no different. The best AI systems don’t just perform well; they are trusted, resilient, and continuously improving. Organizations that get governance right don’t just scale AI — they do it with confidence. Which one is yours?

Nikhil Sachdeva is on LinkedIn.