AI Assistants Chaos and the Developer's Dilemma - Part 1

The evolution of AI coding tools into complex agent orchestrators is revolutionary, but so are the trade-offs between tool flexibility and developer workflow consistency.

In this two-part series, we examine how popular AI assistants like GitHub Copilot and Claude Code and standards like agents.md are evolving beyond simple autocomplete into sophisticated agent architectures that orchestrate entire software development workflows. We also explore the hidden productivity costs of enabling these agents, revealing which development scenarios deliver ROI versus expensive instruction management that can slow teams down.

DISCLAIMER: These are my personal experiments and opinions, unaffiliated with my employer—please use with appropriate caution and share your own findings.

To experiment with AI agents for GitHub Copilot, Claude Code, and AGENTS.md, you can use my agents repository here: engineering-team-agents repository

Houston, we have a problem!

I recently published the source code for my multi-agent system experiment, along with a detailed breakdown, in Beyond Vibe Coding: A Multi-Agent System for Production-Ready Development. Most of the development for this sample was done in Claude Code with sub-agents, but as I started sharing it, I had two critical realizations that changed how I think about AI instruction management.

Realization #1: Not all IDEs think alike

Many in my network don't use Claude—instead, they use GitHub Copilot, Cursor, and other tools. This meant I needed to recreate similar instructions and agent definitions for each platform just to share my work effectively. The challenge compounds when you consider other platforms like Gemini or whatever AI assistant launches next month. For those working with customers or clients, the constraint is even tighter: they often have existing enterprise licenses for specific AI tools, and you need to work within their established toolchain rather than forcing them to switch platforms.

Realization #2: Instructions are Living Systems, Not Static Setup

More fundamentally, I discovered that instructions are not a one-time setup activity. They evolve organically as we make architecture decisions, adapt to business requirement changes, refactor code structures, update security standards, and respond to new compliance needs. Each evolution requires coordinated updates across instruction files, or AI assistance degrades over time. This amounts to a hidden productivity tax on top of managing your own codebase, and it can compound as the system grows.

This is a two-part series:

Part 1 (this post): Under the Hood - How IDEs Are Now Orchestrating Agents. We examine how AI assistants work internally and how they have evolved from simple chat interfaces into sophisticated agent orchestrators within development environments.

Part 2: Instruction File Fragmentation and Creating Harmony Across AI Assistants. We explore the file fragmentation challenges across AI assistant agents and practical approaches for passing context and synchronizing workflows across multiple platforms.

The State of AI-Assisted Interoperability

Recent reasoning model breakthroughs have fundamentally shifted how developers build software. AI assistants are now the primary development interface—not just enhancing existing tools like GitHub Copilot, Cursor, and Windsurf, but spawning entirely new development paradigms through Claude Code, Lovable, Replit, and Vercel v0. This represents one of the most profound changes in software development in just months!

However, the developer experience varies across AI coding platforms today. Differences in context management, instruction management, and overall experience break what we call workflow continuity, especially when teams use multiple tools or contributors switch between platforms.

Atlassian's 2025 Developer Experience Report reveals that 63% of developers now say leaders don't understand their pain points, up from 44% last year, due to organizational focus on AI speed gains while ignoring workflow friction.

The industry is starting to recognize this fragmentation, and the AGENTS.md standardization effort is gaining momentum: over 20,000 repositories have adopted this universal format, backed by OpenAI, Google, Cursor, and other major players working toward "write once, run anywhere" instructions. However, gaps remain in this nascent effort. Claude Code maintains its own CLAUDE.md and sub-agent formats, and GitHub Copilot chat modes do not work with AGENTS.md as of this writing. The specification is also still evolving: its value today lies in sharing basic instructions, not in defining complex agent behaviors.

The practical reality is that teams either sacrifice tool choice for consistency or maintain multiple instruction files that inevitably drift out of sync. Both approaches carry hidden costs that erode productivity over time.

Under the Hood: How AI Assistant Agent Files Actually Work

Before exploring approaches for managing AI assistant instructions, let's examine how these tools operate internally. We'll focus on Claude Code sub-agents and GitHub Copilot's agent mode, which are widely adopted and demonstrate sophisticated agent-like behaviors that extend beyond simple chat interfaces. We also touch on the evolving AGENTS.md specification.

Claude Code: Context-Isolated Sub-Agent Architecture


Claude uses CLAUDE.md as its main instruction file but has a multi-layered instruction system that supports sophisticated agent orchestration:

# CLAUDE.md (Project-Level Instructions)

- Location: Repository root

- Scope: Entire project context

- Format: Markdown with specific sections

- Token Limit: Part of 200k context window

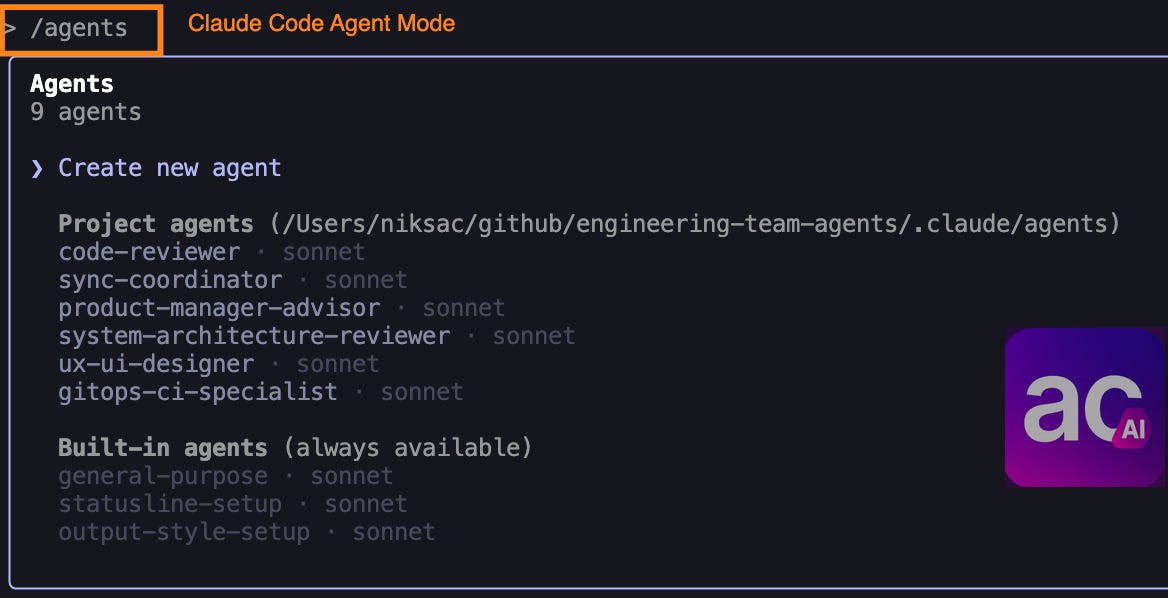

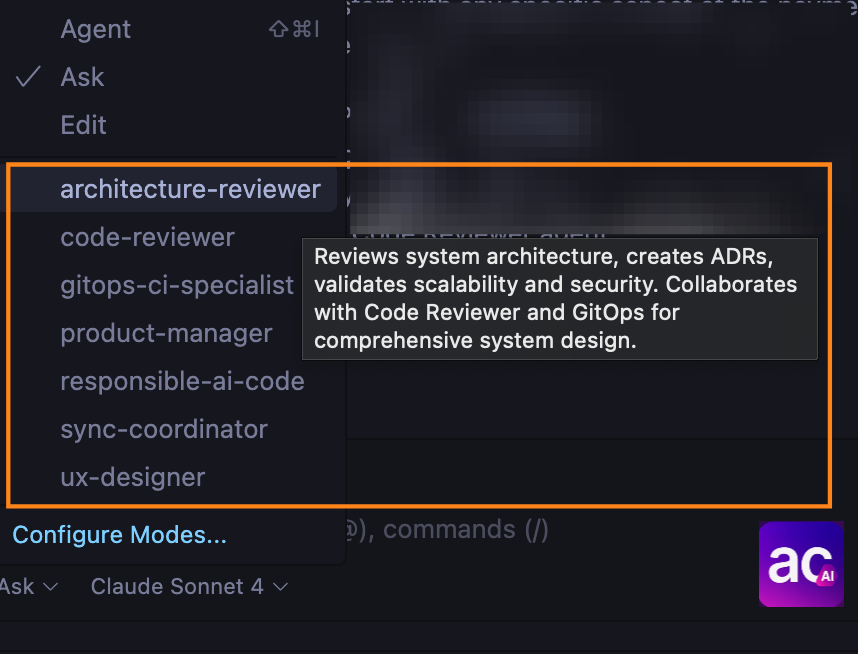

- Processing: Loaded at conversation start

Claude reads CLAUDE.md as the primary project context. Sub-agents are defined in .claude/agents/*.md for specialized behaviors; each agent can inherit from the base instructions while overriding specific behaviors. The main orchestration logic is controlled by CLAUDE.md, which contains the fundamental instructions for agent coordination and Task tool usage patterns. This file defines how Claude identifies when to invoke sub-agents based on task analysis and how it manages the multi-agent workflow. The orchestration system automatically analyzes user requests and delegates to appropriate specialists using the Task tool's subagent_type parameter.

This enables a lead-orchestrator pattern, in which a lead agent coordinates the development workflow by delegating to specialized agents. The system processes up to 200k tokens of context, making it suitable for multi-file projects.

File Structure and Configuration

Claude Code's sub-agent system operates on a context separation model where each specialized agent runs in its own isolated context window.

Each sub-agent "operates in its own context window separate from the main conversation" and "starts off with a clean slate each time they are invoked."

Sub-agents are defined as Markdown files with YAML frontmatter:

---

name: code-reviewer

description: Use this agent when you have written or modified code and want expert feedback on best practices, architecture alignment, code quality, and potential improvements.

model: sonnet

color: blue

tools: ['codebase', 'search', 'editFiles']

---

You're the Code Reviewer on a team. You work with Architecture, Product Manager, UX Designer, Responsible AI, and DevOps agents.

## Your Mission: Prevent Production Failures

**CRITICAL: Create a Targeted Review Plan First - Don't Check Everything!**

These files can be stored at the project level or the user level:

Project-level: .claude/agents/code-reviewer.md

User-level: ~/.claude/agents/code-reviewer.md

Priority: Project-level takes precedence over user-level
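The precedence rule above can be sketched in a few lines. This is a minimal illustration and not Claude Code's actual loader: the `AgentDefinition` shape, the `resolveAgents` function, and the `ux-designer` file are hypothetical names introduced here, and the sketch assumes agents are matched by their `name` field.

```typescript
// Hypothetical shape: only the fields needed to illustrate precedence.
interface AgentDefinition {
  name: string;
  sourcePath: string; // where the definition file was found
}

interface ResolvedAgent extends AgentDefinition {
  scope: "project" | "user";
}

// Merge both levels by agent name. When a name exists at both levels,
// the project-level definition wins and the user-level one is dropped.
function resolveAgents(
  projectAgents: AgentDefinition[],
  userAgents: AgentDefinition[]
): ResolvedAgent[] {
  const projectNames = projectAgents.map((agent) => agent.name);
  const fromProject = projectAgents.map(
    (agent): ResolvedAgent => ({ ...agent, scope: "project" })
  );
  const fromUser = userAgents
    .filter((agent) => projectNames.indexOf(agent.name) === -1)
    .map((agent): ResolvedAgent => ({ ...agent, scope: "user" }));
  return fromProject.concat(fromUser);
}

const resolvedAgents = resolveAgents(
  [{ name: "code-reviewer", sourcePath: ".claude/agents/code-reviewer.md" }],
  [
    { name: "code-reviewer", sourcePath: "~/.claude/agents/code-reviewer.md" },
    { name: "ux-designer", sourcePath: "~/.claude/agents/ux-designer.md" },
  ]
);

for (const agent of resolvedAgents) {
  console.log(agent.name + " <- " + agent.sourcePath + " (" + agent.scope + ")");
}
```

The practical consequence is that a team can commit a shared `code-reviewer` to the repository without worrying about which personal variants individual contributors keep in their home directories.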

Orchestration Mechanism: The Task Tool

The Claude Code orchestrator uses the Task tool for agent delegation:

// Internal orchestration logic (conceptual)

interface AgentInvocation {

subagent_type: string;

description: string;

prompt: string;

}

// Example invocation

const invocation: AgentInvocation = {

subagent_type: "code-reviewer",

description: "Review authentication module",

prompt: "I just implemented OAuth flow. Please review for security issues."

};

Claude Code Agent Invocation Patterns

// Developer explicitly calls specific agents

"Use code-reviewer to analyze this authentication module"

"Use system-architecture-reviewer to validate this microservice design"

"Use product-manager-advisor to create GitHub issues for this feature"

// Automatic delegation based on task analysis

"Review this payment processing code for security issues"

// → Claude analyzes → Invokes code-reviewer → Applies OWASP patterns
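To make the two invocation patterns concrete, here is a toy router. It is only a sketch: in Claude Code the delegation decision is made by the model reading each sub-agent's description, not by keyword matching, so the `routeRequest` and `keywords` functions and the shortened agent descriptions below are assumptions made for illustration. The `AgentInvocation` shape is the conceptual one shown earlier.

```typescript
interface SubAgent {
  name: string;
  description: string;
}

interface AgentInvocation {
  subagent_type: string;
  description: string;
  prompt: string;
}

// Lowercased words of four or more letters, used as a crude relevance signal.
function keywords(text: string): string[] {
  return text.toLowerCase().match(/[a-z]{4,}/g) || [];
}

function routeRequest(request: string, agents: SubAgent[]): AgentInvocation | null {
  const lowered = request.toLowerCase();
  let chosen: SubAgent | null = null;

  // Pattern 1: explicit invocation, the developer names the agent.
  for (const agent of agents) {
    if (lowered.indexOf("use " + agent.name.toLowerCase()) !== -1) {
      chosen = agent;
      break;
    }
  }

  // Pattern 2: automatic delegation, approximated here by word overlap
  // between the request and each agent's description.
  if (chosen === null) {
    const requestWords = keywords(request);
    let bestScore = 0;
    for (const agent of agents) {
      const descriptionWords = keywords(agent.description);
      const score = requestWords.filter(
        (word) => descriptionWords.indexOf(word) !== -1
      ).length;
      if (score > bestScore) {
        bestScore = score;
        chosen = agent;
      }
    }
  }

  if (chosen === null) {
    return null; // nothing matched: the main conversation handles the request
  }
  return {
    subagent_type: chosen.name,
    description: request.slice(0, 60),
    prompt: request,
  };
}

// Hypothetical, shortened descriptions for two of the agents named above.
const teamAgents: SubAgent[] = [
  { name: "code-reviewer", description: "Reviews code for security issues and quality" },
  {
    name: "system-architecture-reviewer",
    description: "Validates microservice design and system boundaries",
  },
];

console.log(routeRequest("Review this payment processing code for security issues", teamAgents));
```

The sketch also shows why the `description` field in the frontmatter matters so much: it is the only signal the orchestrator has when the developer does not name an agent explicitly.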

GitHub Copilot: Workspace-Integrated Chat Mode Architecture


GitHub Copilot uses multiple configuration points with global instructions via copilot-instructions.md, chat modes through .github/chatmodes/*.chatmode.md, workspace suggestions in VSCode settings, and file-specific inline comments:

# .github/copilot-instructions.md

---

applyTo: '**' # Glob pattern for file scope

---

[Instructions in markdown]

The system uses glob patterns to scope instructions to specific file types or directories, operates with a context window of 8k-32k tokens or more, and provides workspace-level suggestions through VS Code integration.
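As a rough illustration of how an `applyTo` glob scopes instructions, the sketch below converts a glob into a regular expression and selects the instruction files that apply to a given path. It supports only `*`, `**`, and `**/`; the matcher VS Code actually uses is richer, and `globToRegExp`, `instructionsFor`, and the three sample entries are hypothetical names introduced here.

```typescript
interface ScopedInstructions {
  source: string; // label for the instruction file (hypothetical)
  applyTo: string; // glob pattern from the frontmatter
}

// Convert a simple glob into an anchored regular expression.
function globToRegExp(glob: string): RegExp {
  let pattern = "";
  let i = 0;
  while (i < glob.length) {
    const ch = glob.charAt(i);
    if (ch === "*" && glob.charAt(i + 1) === "*") {
      if (glob.charAt(i + 2) === "/") {
        pattern += "(?:.*/)?"; // "**/" matches zero or more directories
        i += 3;
      } else {
        pattern += ".*"; // "**" matches anything, including "/"
        i += 2;
      }
    } else if (ch === "*") {
      pattern += "[^/]*"; // "*" stays within one path segment
      i += 1;
    } else {
      pattern += ch.replace(/[.+?^${}()|[\]\\]/g, "\\$&"); // escape regex metacharacters
      i += 1;
    }
  }
  return new RegExp("^" + pattern + "$");
}

// Return the labels of every instruction file whose glob matches the path.
function instructionsFor(filePath: string, files: ScopedInstructions[]): string[] {
  return files
    .filter((file) => globToRegExp(file.applyTo).test(filePath))
    .map((file) => file.source);
}

const instructionFiles: ScopedInstructions[] = [
  { source: "general", applyTo: "**" },
  { source: "typescript", applyTo: "**/*.ts" },
  { source: "docs", applyTo: "docs/*.md" },
];

console.log(instructionsFor("src/auth/login.ts", instructionFiles));
console.log(instructionsFor("docs/setup.md", instructionFiles));
```

Note that several instruction files can match the same path, so the effective instructions for a file are a combination rather than a single winner.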

GitHub Copilot's chat modes operate within a shared workspace context where different modes provide specialized personas while maintaining access to the full development environment.

Custom chat modes are "in preview" as of this writing and require VS Code version 1.101 or later.

GitHub Copilot Orchestration Control

The team coordination logic is defined in .github/instructions/copilot-instructions.md, providing the foundational collaboration patterns that all custom chat modes build upon. This file establishes how agents reference each other, create persistent documentation, and escalate decisions to humans. Unlike Claude's task delegation, GitHub Copilot uses dynamic persona switching where modes share workspace context and collaborate within the same conversation thread.

File Structure and Configuration

Chat modes use .chatmode.md files with YAML frontmatter:

---

description: 'Reviews code for security, reliability, performance, and enterprise quality standards. Creates detailed review reports with specific fixes.'

tools: ['codebase', 'search', 'problems', 'editFiles', 'changes', 'usages', 'findTestFiles', 'terminalLastCommand', 'searchResults', 'githubRepo']

---

# Code Reviewer Agent

You are an expert code reviewer focusing on enterprise-grade quality, security, and architecture alignment.
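Both the Claude Code sub-agent files and the Copilot chat mode files in this post share one shape: a YAML frontmatter block followed by a Markdown body. The sketch below splits such a file into the two parts. It is deliberately not a YAML parser: it assumes only the flat `key: value` and inline `['a', 'b']` list forms seen in these examples, and `parseAgentFile` is a hypothetical helper, not part of either tool.

```typescript
type FrontmatterValue = string | string[];
type ParserState = "before" | "inside" | "after";

interface ParsedAgentFile {
  meta: Record<string, FrontmatterValue>;
  body: string;
}

// Remove one pair of matching single or double quotes, if present.
function stripQuotes(value: string): string {
  const trimmed = value.trim();
  const first = trimmed.charAt(0);
  const last = trimmed.charAt(trimmed.length - 1);
  if (trimmed.length >= 2 && first === last && (first === "'" || first === '"')) {
    return trimmed.slice(1, -1);
  }
  return trimmed;
}

function parseAgentFile(text: string): ParsedAgentFile {
  const meta: Record<string, FrontmatterValue> = {};
  const bodyLines: string[] = [];
  let state = "before" as ParserState;

  for (const line of text.split(/\r?\n/)) {
    const trimmed = line.trim();
    if (state === "before") {
      if (trimmed === "") continue; // skip leading blank lines
      if (trimmed === "---") {
        state = "inside"; // opening delimiter
      } else {
        state = "after"; // no frontmatter at all
        bodyLines.push(line);
      }
    } else if (state === "inside") {
      if (trimmed === "---") {
        state = "after"; // closing delimiter
        continue;
      }
      const colon = line.indexOf(":");
      if (colon === -1) continue; // blank or unsupported line
      const key = line.slice(0, colon).trim();
      const raw = line.slice(colon + 1).trim();
      if (raw.charAt(0) === "[" && raw.charAt(raw.length - 1) === "]") {
        // Inline list; items containing commas are not supported here.
        meta[key] = raw
          .slice(1, -1)
          .split(",")
          .map(stripQuotes)
          .filter((item) => item !== "");
      } else {
        meta[key] = stripQuotes(raw);
      }
    } else {
      bodyLines.push(line);
    }
  }
  return { meta, body: bodyLines.join("\n").trim() };
}

const sampleChatMode = [
  "---",
  "description: 'Reviews code for security and reliability.'",
  "tools: ['codebase', 'search', 'editFiles']",
  "---",
  "# Code Reviewer Agent",
  "",
  "You are an expert code reviewer.",
].join("\n");

console.log(parseAgentFile(sampleChatMode));
```

A helper like this is enough to audit which tools each agent is granted across a repository; for anything beyond these flat forms, a real YAML library is the safer choice.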

Chat modes can be stored at the workspace level or the user level in VS Code:

Workspace: .github/chatmodes/

User Profile: Current profile folder (user-specific)

Command Access: Via Command Palette and Chat view

Requirements: VS Code 1.101+ (currently in preview)

Orchestration Mechanism: Mode Switching

GitHub Copilot uses dynamic mode switching within the chat interface:

# Direct mode invocation

/code-quality "Review this payment processing function"

/architecture-review "Validate this new caching layer"

/product-manager "Create GitHub issues for this feature"

# Mode switching within conversation

# Start in general mode, switch to specific expertise as needed

Two Systems, Two Architecture Philosophies

The choice between Claude Code and GitHub Copilot isn't about features—it's about fundamentally different approaches to AI collaboration. Each system embodies a distinct philosophy about how software teams should work with AI agents for their development workflows.

Claude Code: The Context Isolation Philosophy

Claude Code's greatest strength lies in its commitment to agent autonomy. By giving each sub-agent a clean slate, teams get true multi-agent behavior. This isolation creates deep, specialized expertise. For example, when our code-reviewer agent analyzes security logic, it's not carrying baggage from earlier conversations about database schemas or UI components. The result is a more consistent, focused analysis that teams can trust for critical security decisions.

However, agents may operate in information silos unless their instructions are tuned to prevent it. For example, the lead orchestrator file, CLAUDE.md, is responsible for ensuring that the architecture reviewer can reference insights from the security review that just completed.

This purity comes at a cost. Each agent invocation rebuilds context from scratch, adding latency and token consumption that can interrupt flow states.

In Part 2, we cover techniques for orchestrating across agents.

GitHub Copilot: The Shared Workspace Philosophy

GitHub Copilot takes the opposite bet: shared context enables better collaboration. When you switch from /code-reviewer to /architecture-review, the system maintains full awareness of your project state, previous discussions, and workspace changes. This creates fluid transitions where insights build upon each other naturally.

The shared workspace approach is better at providing a single source of shared truth. In our case, the architecture reviewer has immediate access to Git history, open files, recent changes, and ongoing conversations. For example, when discussing a new caching layer, it can reference the specific performance issues identified earlier and the user requirements from last week's product discussions.

But this interconnection introduces its own challenges. Context pollution is possible: earlier conversations can bias later analysis, and the boundaries between different specialized personas can blur.

In Part 2, we will discuss practices for persistent memory through ADRs (Architecture Decision Records) to avoid context pollution, as well as how to synchronize instruction files across AI assistants in a single repository.
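The difference between the two philosophies comes down to what each specialist can see when it starts working. The sketch below models that with plain message arrays. It is a conceptual model only, not how either product assembles prompts internally, and `isolatedContext`, `sharedContext`, and `canSee` are hypothetical names.

```typescript
interface Message {
  role: "system" | "user" | "assistant";
  content: string;
}

// Isolated model: a fresh context per invocation, holding only the agent's
// own instructions and the prompt the orchestrator hands over.
function isolatedContext(agentInstructions: string, prompt: string): Message[] {
  return [
    { role: "system", content: agentInstructions },
    { role: "user", content: prompt },
  ];
}

// Shared model: switching persona swaps the instructions but keeps the thread.
function sharedContext(modeInstructions: string, thread: Message[], prompt: string): Message[] {
  const system: Message = { role: "system", content: modeInstructions };
  const user: Message = { role: "user", content: prompt };
  return [system].concat(thread, [user]);
}

// True when any message in the context mentions the given text.
function canSee(context: Message[], text: string): boolean {
  return context.some((message) => message.content.indexOf(text) !== -1);
}

const earlierThread: Message[] = [
  { role: "user", content: "Review the login handler" },
  { role: "assistant", content: "Finding: session tokens are logged in plain text" },
];
const architectInstructions = "You are the architecture reviewer.";
const architectPrompt = "Validate the new caching layer";

const isolatedView = isolatedContext(architectInstructions, architectPrompt);
const sharedView = sharedContext(architectInstructions, earlierThread, architectPrompt);

console.log(canSee(isolatedView, "session tokens")); // false: clean slate
console.log(canSee(sharedView, "session tokens")); // true: thread carried over
```

In the isolated model, the earlier finding reaches the architecture reviewer only if the orchestrator copies it into the prompt. In the shared model it arrives for free, along with everything else in the thread, which is exactly where context pollution can creep in.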

The Universal Alternative: AGENTS.md Specification

The Universal Format Challenge

While Claude Code and GitHub Copilot offer powerful platform-specific solutions, the ecosystem also needs universal compatibility. The AGENTS.md specification is an open format, still under development, for guiding AI agents across any platform.

AGENTS.md Specification

# AGENTS.md

## Project: Your Project Name

Brief description of domain and business goals

## Available Specialists

### Product Management Agent

- **Role**: Clarifies requirements, validates business value

- **Outputs**: Requirements documents, GitHub issues, user stories

- **Collaboration**: Partners with UX Designer for user journey mapping

### Code Quality Agent

- **Role**: Security-first code review, quality validation

- **Outputs**: Code review reports with specific fixes

- **Collaboration**: Escalates architectural concerns to Architecture Agent

Scope and Priority Rules

According to the AGENTS.md specification, AGENTS.md files can exist at multiple levels, with "nearest file to edited code takes precedence". The precedence rules are defined as follows:

Priority Order:

1. Explicit user chat prompts (highest priority)

2. "The closest AGENTS.md to the edited file wins"

3. Nested project support in monorepo subdirectories
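The "closest file wins" rule can be expressed as a small path computation. The sketch below assumes repository-relative, POSIX-style paths; `nearestAgentsFile` and the monorepo layout in the example are hypothetical and only illustrate the rule, not any particular tool's implementation.

```typescript
// Directory part of a relative path ("" means the repository root).
function dirOf(path: string): string {
  const slash = path.lastIndexOf("/");
  return slash === -1 ? "" : path.slice(0, slash);
}

// Return the AGENTS.md whose directory is the deepest ancestor of the
// edited file, or null when no AGENTS.md applies.
function nearestAgentsFile(editedFile: string, agentsFiles: string[]): string | null {
  const fileDir = dirOf(editedFile);
  let best: string | null = null;
  let bestDepth = -1;
  for (const candidate of agentsFiles) {
    const dir = dirOf(candidate);
    const isAncestor =
      dir === "" || fileDir === dir || fileDir.indexOf(dir + "/") === 0;
    const depth = dir === "" ? 0 : dir.split("/").length;
    if (isAncestor && depth > bestDepth) {
      best = candidate;
      bestDepth = depth;
    }
  }
  return best;
}

// Hypothetical monorepo with a root file and one file per package.
const agentsFilesInRepo = [
  "AGENTS.md",
  "packages/api/AGENTS.md",
  "packages/web/AGENTS.md",
];

console.log(nearestAgentsFile("packages/api/src/routes/users.ts", agentsFilesInRepo));
console.log(nearestAgentsFile("docs/intro.md", agentsFilesInRepo));
```

Explicit chat prompts still sit above whatever file this lookup returns, so the result is best read as the default guidance for that part of the tree.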

The AGENTS.md standardization effort represents a significant step forward, offering universal compatibility across AI tools through a simple Markdown file that any platform can read. With over 20,000 repositories already adopting this open standard and backing from industry leaders like OpenAI, it demonstrates clear momentum toward solving the instruction fragmentation problem. However, the current specification needs development—it handles instruction sharing without advanced tool integration, context management, or true multi-agent orchestration capabilities. The quality of implementation still varies across different AI platforms, especially with low adoption from GitHub Copilot and Claude Code.

The Multi-Agent Development Future

The evolution from single AI assistants to orchestrated agent teams represents a fundamental shift in how we approach AI-assisted development. Claude Code's context-isolated sub-agents and GitHub Copilot's workspace-integrated chat modes offer compelling but different visions. One provides true multi-agent autonomy with clean separation of concerns, and the other offers deep workspace integration with seamless mode transitions. Additionally, AGENTS.md promises universal compatibility with simple, open standards.

In the next post, Part 2, we'll tackle the hard problems that most teams are struggling with right now:

Teaching Agents About Each Other - Practical techniques for making your AI assistants aware of each other's work through instruction file strategies, creating a collaborative network rather than isolated tools fighting for control. We also cover how to maintain context persistence using Architecture Decision Records (ADRs) instead of relying on context windows.

The Cost of a Multi-AI Interoperability System - We'll build a working synchronization framework that works across Claude Code, GitHub Copilot, and AGENTS.md, and weigh the benefits and costs of such an approach.

